Linux RISC-V head.S debugging notes

Preparation

Debugging with GDB

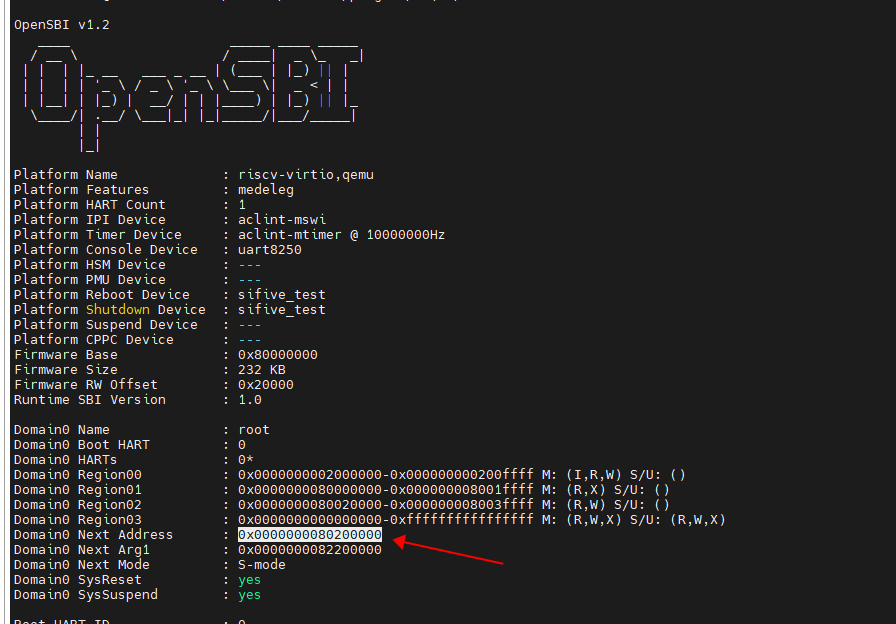

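0x0000000080200000 is the physical address that OpenSBI jumps to. The Linux kernel Image is loaded at this address and runs from there. To stop at that point, use b *(0x0000000080200000). Symbol breakpoints in head.S do not work, because the symbols in vmlinux are linked at virtual addresses (0xffffffff80000000 and up), while this early code runs at physical addresses. Debug symbols require CONFIG_DEBUG_INFO=y.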

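        li s4, -13              // c.li s4,-13: its encoding is the "MZ" magic of the UEFI/PE image header (CONFIG_EFI)
        j _start_kernel         // j 0x802010cc

    _start_kernel:
        // Disable all interrupts
        csrw sie, zero
        csrw sip, zero

        // Load the global pointer
        la gp, __global_pointer$

        // Disable the FPU: with SR_FS cleared, FP instructions trap
        li t0, SR_FS
        csrc CSR_STATUS, t0

        // Harts whose hartid (a0) is >= CONFIG_NR_CPUS cannot be used:
        // park them in .Lsecondary_park (a wfi loop)
        li t0, CONFIG_NR_CPUS
        blt a0, t0, .Lgood_cores
        tail .Lsecondary_park

    .Lgood_cores:
        // Hart lottery: the first hart to increment hart_lottery (old value 0)
        // becomes the boot hart and runs the init code; all others go to .Lsecondary_start
        la a3, hart_lottery
        li a2, 1
        amoadd.w a3, a2, (a3)
        bnez a3, .Lsecondary_start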

Setting breakpoints on physical addresses directly

To debug Linux, build the kernel with CONFIG_DEBUG_INFO=y, then in gdb:

    b *(0x0000000080200000)
    file linux-5.18/vmlinux

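How do you set a breakpoint in kernel code that runs before the MMU is enabled?

Only on the physical address where the code runs, e.g. b *(0x0000000080200000).

How do you find the physical address of a symbol?

- info address setup_vm gives the symbol's virtual address
- riscv64-unknown-linux-gnu-readelf -h vmlinux gives the virtual entry address of the kernel image
- find the physical address where the image is loaded (0x80200000 here, where OpenSBI jumps)
- symbol physical address = symbol virtual address - image entry virtual address + image load physical address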

    (gdb) info address setup_vm
    Symbol "setup_vm" is a function at address 0xffffffff80804a82.

Target physical address = 0xffffffff80804a82 - 0xffffffff80000000 + 0x0000000080200000 = 0x80a04a82, so:

    (gdb) b *(0x80A04A82)

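Symbol breakpoints

First, use riscv64-unknown-linux-gnu-readelf -h vmlinux to get the virtual entry address of the image. Next, use riscv64-unknown-linux-gnu-readelf -S vmlinux to get each section's offset from the first section. Adding a section's offset to the image's physical load address gives the section's load address. Finally, load the symbols at those addresses with add-symbol-file.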

    $ riscv64-unknown-linux-gnu-readelf -S vmlinux
    There are 38 section headers, starting at offset 0xb3cc860:

    Section Headers:
      [Nr] Name              Type             Address           Offset
           Size              EntSize          Flags  Link  Info  Align
      [ 0]                   NULL             0000000000000000  00000000
           0000000000000000  0000000000000000           0     0     0
      [ 1] .head.text        PROGBITS         ffffffff80000000  00001000
           0000000000001e90  0000000000000000  AX       0     0     4096
      [ 2] .text             PROGBITS         ffffffff80002000  00003000
           000000000065bdb0  0000000000000000  AX       0     0     4
      [ 3] .init.text        PROGBITS         ffffffff80800000  00800000
           0000000000035132  0000000000000000  AX       0     0     2097152
      [ 4] .exit.text        PROGBITS         ffffffff80835138  00835138
           0000000000001996  0000000000000000  AX       0     0     2
      [ 5] .init.data        PROGBITS         ffffffff80a00000  00837000
           0000000000014e38  0000000000000000  WA       0     0     4096
      [ 6] .data..percpu     PROGBITS         ffffffff80a15000  0084c000
           00000000000084f8  0000000000000000  WA       0     0     64
      [ 7] .alternative      PROGBITS         ffffffff80a1d4f8  008544f8
           0000000000000288  0000000000000000   A       0     0     1
      [ 8] .rodata           PROGBITS         ffffffff80c00000  00855000
           0000000000195710  0000000000000000  WA       0     0     64

For example, the offset of .text is ffffffff80002000 - ffffffff80000000 = 0x2000, so its load address is 0x80200000 + 0x2000 = 0x80202000.

    (gdb) add-symbol-file linux-5.18/vmlinux -s .head.text 0x80200000 -s .text 0x80202000 -s .rodata 0x80e00000 -s .init.text 0x80a00000 -s .init.data 0x80c00000
    add symbol table from file "linux-5.18/vmlinux" at
            .head.text_addr = 0x80200000
            .text_addr = 0x80202000
            .rodata_addr = 0x80e00000
            .init.text_addr = 0x80a00000
            .init.data_addr = 0x80c00000
    (y or n) y
    Reading symbols from linux-5.18/vmlinux...

setup_vm() fills in kernel_map, a struct kernel_mapping:

    struct kernel_mapping {
        unsigned long page_offset;
        unsigned long virt_addr;
        uintptr_t phys_addr;
        uintptr_t size;
        /* Offset between linear mapping virtual address and kernel load address */
        unsigned long va_pa_offset;
        /* Offset between kernel mapping virtual address and kernel load address */
        unsigned long va_kernel_pa_offset;
        unsigned long va_kernel_xip_pa_offset;
    #ifdef CONFIG_XIP_KERNEL
        uintptr_t xiprom;
        uintptr_t xiprom_sz;
    #endif
    };

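printk debugging

How can you print before the MMU is enabled?

I wanted to print from inside setup_vm, but printk cannot be used at this stage because the MMU is not on yet. Instead, you can print through OpenSBI. The patch below adds a minimal _printf on top of the legacy SBI console_putchar call (extension ID 0x1).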

    +static void _sbi_ecall(int ext, int fid, unsigned long arg0,
    +                       unsigned long arg1, unsigned long arg2,
    +                       unsigned long arg3, unsigned long arg4,
    +                       unsigned long arg5)
    +{
    +    /* SBI calling convention: a7 = extension ID, a6 = function ID, a0-a5 = arguments */
    +    register uintptr_t a0 asm ("a0") = (uintptr_t)(arg0);
    +    register uintptr_t a1 asm ("a1") = (uintptr_t)(arg1);
    +    register uintptr_t a2 asm ("a2") = (uintptr_t)(arg2);
    +    register uintptr_t a3 asm ("a3") = (uintptr_t)(arg3);
    +    register uintptr_t a4 asm ("a4") = (uintptr_t)(arg4);
    +    register uintptr_t a5 asm ("a5") = (uintptr_t)(arg5);
    +    register uintptr_t a6 asm ("a6") = (uintptr_t)(fid);
    +    register uintptr_t a7 asm ("a7") = (uintptr_t)(ext);
    +
    +    asm volatile ("ecall"
    +                  : "+r" (a0), "+r" (a1)
    +                  : "r" (a2), "r" (a3), "r" (a4), "r" (a5), "r" (a6), "r" (a7)
    +                  : "memory");
    +}
    +
    +/* Legacy SBI v0.1 console_putchar: extension ID 0x1 */
    +static void _sbi_console_putchar(int ch)
    +{
    +    _sbi_ecall(0x1, 0, ch, 0, 0, 0, 0, 0);
    +}
    +
    +static void uart_puts(const char *s)
    +{
    +    while (*s)
    +        _sbi_console_putchar(*s++);
    +}

    +static int _vsnprintf(char *out, size_t n, const char *s, va_list vl)
    +{
    +    int format = 0;   /* set after '%', cleared once a conversion is done */
    +    int longarg = 0;  /* set by the 'l' modifier (and by %p) */
    +    size_t pos = 0;   /* full output length; only the first n bytes are stored */
    +
    +    for (; *s; s++) {
    +        if (format) {
    +            switch (*s) {
    +            case 'l':
    +                longarg = 1;
    +                break;
    +            case 'p':
    +                longarg = 1;
    +                if (out && pos < n)
    +                    out[pos] = '0';
    +                pos++;
    +                if (out && pos < n)
    +                    out[pos] = 'x';
    +                pos++;
    +                /* fall through: print the pointer value as a long in hex */
    +            case 'x': {
    +                /* Always prints every hex digit (zero-padded to 8 or 16) */
    +                long num = longarg ? va_arg(vl, long) : va_arg(vl, int);
    +                int hexdigits = 2 * (longarg ? sizeof(long) : sizeof(int)) - 1;
    +                for (int i = hexdigits; i >= 0; i--) {
    +                    int d = (num >> (4 * i)) & 0xF;
    +                    if (out && pos < n)
    +                        out[pos] = (d < 10 ? '0' + d : 'a' + d - 10);
    +                    pos++;
    +                }
    +                longarg = 0;
    +                format = 0;
    +                break;
    +            }
    +            case 'd': {
    +                long num = longarg ? va_arg(vl, long) : va_arg(vl, int);
    +                if (num < 0) {
    +                    num = -num;
    +                    if (out && pos < n)
    +                        out[pos] = '-';
    +                    pos++;
    +                }
    +                long digits = 1;
    +                for (long nn = num; nn /= 10; digits++)   /* count decimal digits */
    +                    ;
    +                for (int i = digits - 1; i >= 0; i--) {  /* fill from the right */
    +                    if (out && pos + i < n)
    +                        out[pos + i] = '0' + (num % 10);
    +                    num /= 10;
    +                }
    +                pos += digits;
    +                longarg = 0;
    +                format = 0;
    +                break;
    +            }
    +            case 's': {
    +                const char *s2 = va_arg(vl, const char *);
    +                while (*s2) {
    +                    if (out && pos < n)
    +                        out[pos] = *s2;
    +                    pos++;
    +                    s2++;
    +                }
    +                longarg = 0;
    +                format = 0;
    +                break;
    +            }
    +            case 'c':
    +                if (out && pos < n)
    +                    out[pos] = (char)va_arg(vl, int);
    +                pos++;
    +                longarg = 0;
    +                format = 0;
    +                break;
    +            default:
    +                break;
    +            }
    +        } else if (*s == '%') {
    +            format = 1;
    +        } else {
    +            if (out && pos < n)
    +                out[pos] = *s;
    +            pos++;
    +        }
    +    }
    +    if (out && pos < n)
    +        out[pos] = 0;
    +    else if (out && n)
    +        out[n - 1] = 0;
    +    return pos;
    +}

    +
    +static char out_buf[1000]; // buffer for _vprintf()
    +
    +static int _vprintf(const char *s, va_list vl)
    +{
    +    va_list vl2;
    +    int res;
    +
    +    /* The arguments are walked twice (measure, then format), so copy the va_list */
    +    va_copy(vl2, vl);
    +    res = _vsnprintf(NULL, -1, s, vl);
    +    if (res + 1 >= sizeof(out_buf)) {
    +        uart_puts("error: output string size overflow\n");
    +        while (1) {}
    +    }
    +    _vsnprintf(out_buf, res + 1, s, vl2);
    +    va_end(vl2);
    +    uart_puts(out_buf);
    +    return res;
    +}
    +
    +int _printf(const char *s, ...)
    +{
    +    int res = 0;
    +    va_list vl;
    +
    +    va_start(vl, s);
    +    res = _vprintf(s, vl);
    +    va_end(vl);
    +    return res;
    +}
    +

    pgd_t early_pg_dir[PTRS_PER_PGD] __initdata __aligned(PAGE_SIZE);

    /* Number of entries in the page global directory */
    #define PTRS_PER_PGD    (PAGE_SIZE / sizeof(pgd_t))
    /* Number of entries in the page table */
    #define PTRS_PER_PTE    (PAGE_SIZE / sizeof(pte_t))

With PAGE_SIZE = 4096 and sizeof(pgd_t) = 8, PTRS_PER_PGD = 512. With 4-level (Sv48) paging, PGDIR_SIZE = 0x8000000000, so each PGD entry maps 512 GiB.

Probing the satp mode

    static __init void set_satp_mode(void)
    {
        u64 identity_satp, hw_satp;
        uintptr_t set_satp_mode_pmd = ((unsigned long)set_satp_mode) & PMD_MASK;
        bool check_l4 = false;

        // Identity-map (VA == PA) the code around set_satp_mode, starting at its
        // PMD-aligned base: fill in the P4D/PUD/PMD page tables for this range
        create_p4d_mapping(early_p4d,
                           set_satp_mode_pmd, (uintptr_t)early_pud,
                           P4D_SIZE, PAGE_TABLE);
        create_pud_mapping(early_pud,
                           set_satp_mode_pmd, (uintptr_t)early_pmd,
                           PUD_SIZE, PAGE_TABLE);
        /* Handle the case where set_satp_mode straddles 2 PMDs */
        // PMD_SIZE = 2 MiB (0x200000). Two PMD entries (4 MiB in total) are mapped
        // in case the function crosses a 2 MiB boundary; otherwise one would suffice.
        create_pmd_mapping(early_pmd,
                           set_satp_mode_pmd, set_satp_mode_pmd,
                           PMD_SIZE, PAGE_KERNEL_EXEC);
        create_pmd_mapping(early_pmd,
                           set_satp_mode_pmd + PMD_SIZE,
                           set_satp_mode_pmd + PMD_SIZE,
                           PMD_SIZE, PAGE_KERNEL_EXEC);
    retry:
        // First try 5-level paging (Sv57); on retry (check_l4) use 4-level (Sv48)
        create_pgd_mapping(early_pg_dir,
                           set_satp_mode_pmd,
                           check_l4 ? (uintptr_t)early_pud : (uintptr_t)early_p4d,
                           PGDIR_SIZE, PAGE_TABLE);

        identity_satp = PFN_DOWN((uintptr_t)&early_pg_dir) | satp_mode;

        local_flush_tlb_all();
        csr_write(CSR_SATP, identity_satp);
        // satp MODE is 10 (Sv57) or 9 (Sv48); early_pg_dir (512 entries) is the
        // top-level page table. If the mode is supported, the MMU is now on and every
        // instruction fetch and data access is translated by the MMU. That is why
        // set_satp_mode was identity-mapped above: without that mapping the MMU would
        // find no valid PTE for the PC and raise a page fault.
        hw_satp = csr_swap(CSR_SATP, 0ULL); // executed through the identity mapping
        // Writes 0 (Bare mode) to satp, turning the MMU off again, and returns the
        // old satp value in hw_satp.
        local_flush_tlb_all();

        // If the hardware supports the requested mode, the value read back (hw_satp)
        // equals identity_satp. Otherwise the write was ignored: retry with Sv48,
        // and if that fails too, fall back to Sv39.
        if (hw_satp != identity_satp) {
            if (!check_l4) {
                disable_pgtable_l5();
                check_l4 = true;
                memset(early_pg_dir, 0, PAGE_SIZE);
                goto retry;
            }
            disable_pgtable_l4();
        }

        memset(early_pg_dir, 0, PAGE_SIZE);
        memset(early_p4d, 0, PAGE_SIZE);
        memset(early_pud, 0, PAGE_SIZE);
        memset(early_pmd, 0, PAGE_SIZE);
    }

    // Sv57 (5-level) is not supported: update page_offset and satp_mode
    static void __init disable_pgtable_l5(void)
    {
        pgtable_l5_enabled = false;
        kernel_map.page_offset = PAGE_OFFSET_L4;
        satp_mode = SATP_MODE_48;
    }

    // Sv48 (4-level) is not supported either: update page_offset and satp_mode.
    // The hardware in this chapter's experiment supports 4-level paging, so this
    // function is not reached there.
    static void __init disable_pgtable_l4(void)
    {
        pgtable_l4_enabled = false;
        kernel_map.page_offset = PAGE_OFFSET_L3;
        satp_mode = SATP_MODE_39;
    }

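set_satp_mode probes which paging mode the hardware supports. It tries 5-level paging first and then 4-level. The test relies on how satp behaves. A write with a supported mode takes effect, while a write with an unsupported mode is ignored, so reading satp back shows whether the mode was accepted.

The create_p4d_mapping/create_pud_mapping/create_pmd_mapping calls at the start identity-map (VA = PA) 4 MiB, i.e. two PMD entries, starting at the PMD-aligned base of set_satp_mode. This is required because, as soon as satp is written and the MMU is enabled, the current PC is treated as a virtual address. The MMU must then translate it to the real load address. If the MMU finds no page-table entry for that address, an exception is raised. Filling in the page tables for set_satp_mode first, so that its virtual addresses map to themselves, lets the code keep running after the MMU is enabled.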

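- Sv64: 6-level page tables (reserved; not yet defined by the privileged specification)
- Sv57: 5-level page tables
- Sv48: 4-level page tables
- Sv39: 3-level page tables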

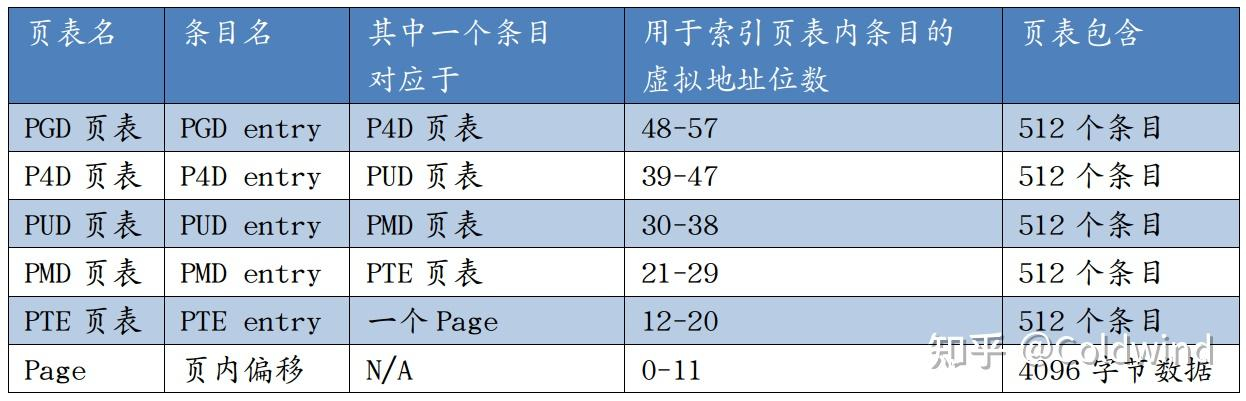

5-level page table structure

Enabling the MMU

    relocate_enable_mmu:
        /* Relocate return address */
        la a1, kernel_map                   // a1 = address of kernel_map
        XIP_FIXUP_OFFSET a1                 // adjust for XIP (execute-in-place) kernels; no-op otherwise
        REG_L a1, KERNEL_MAP_VIRT_ADDR(a1)  // a1 = kernel_map.virt_addr, the kernel's virtual base
        la a2, _start                       // a2 = address of _start (physical: the MMU is still off)
        sub a1, a1, a2                      // a1 = virt_addr - PA(_start): the VA - PA offset
        add ra, ra, a1                      // relocate the return address from PA to VA

        /* Point stvec to virtual address of instruction after satp write */
        la a2, 1f                           // a2 = physical address of label 1 below
        add a2, a2, a1                      // a2 = virtual address of label 1
        csrw CSR_TVEC, a2                   // stvec = VA of label 1: the trap taken right
                                            // after the satp write lands there

        /* Compute satp for kernel page tables, but don't load it yet */
        srl a2, a0, PAGE_SHIFT              // a2 = PPN of the page table whose PA is in a0
                                            // (early_pg_dir on the boot path)
        la a1, satp_mode                    // a1 = address of satp_mode
        REG_L a1, 0(a1)                     // a1 = value of satp_mode (the MODE field)
        or a2, a2, a1                       // a2 = kernel satp value (PPN | MODE), not loaded yet

        /*
         * Load trampoline page directory, which will cause us to trap to
         * stvec if VA != PA, or simply fall through if VA == PA.  We need a
         * full fence here because setup_vm() just wrote these PTEs and we need
         * to ensure the new translations are in use.
         */
        la a0, trampoline_pg_dir            // a0 = address of trampoline_pg_dir
        XIP_FIXUP_OFFSET a0                 // XIP adjustment
        srl a0, a0, PAGE_SHIFT              // a0 = its PPN
        or a0, a0, a1                       // a0 = trampoline satp value (PPN | MODE)
        sfence.vma                          // make the PTEs just written by setup_vm() visible
        csrw CSR_SATP, a0                   // load trampoline_pg_dir: the MMU is now on
        // The next instruction is still fetched at its physical address, which
        // trampoline_pg_dir does not map (it only maps the kernel's virtual
        // address), so the fetch faults and traps to stvec.
    .align 2
    1:
        // The first fetch of label 1 at its PA faulted; the trap set the PC to
        // stvec, i.e. the VA of label 1, which is mapped, so execution continues
        // here in virtual address space. This is the clever part.
        /* Set trap vector to spin forever to help debug */
        la a0, .Lsecondary_park
        csrw CSR_TVEC, a0                   // from now on, any trap parks the hart

        /* Reload the global pointer */
    .option push
    .option norelax
        la gp, __global_pointer$
    .option pop

        /*
         * Switch to kernel page tables.  A full fence is necessary in order to
         * avoid using the trampoline translations, which are only correct for
         * the first superpage.  Fetching the fence is guaranteed to work
         * because that first superpage is translated the same way.
         */
        csrw CSR_SATP, a2
        sfence.vma

        ret

    asmlinkage void __init setup_vm(uintptr_t dtb_pa)
    {
        pmd_t __maybe_unused fix_bmap_spmd, fix_bmap_epmd;

        kernel_map.virt_addr = KERNEL_LINK_ADDR;
        kernel_map.page_offset = _AC(CONFIG_PAGE_OFFSET, UL);
        _printf("%s,%d,virt_addr:%lx, offset:%lx\n", __func__, __LINE__,
                KERNEL_LINK_ADDR, kernel_map.page_offset);

    #ifdef CONFIG_XIP_KERNEL
        kernel_map.xiprom = (uintptr_t)CONFIG_XIP_PHYS_ADDR;
        kernel_map.xiprom_sz = (uintptr_t)(&_exiprom) - (uintptr_t)(&_xiprom);

        phys_ram_base = CONFIG_PHYS_RAM_BASE;
        kernel_map.phys_addr = (uintptr_t)CONFIG_PHYS_RAM_BASE;
        kernel_map.size = (uintptr_t)(&_end) - (uintptr_t)(&_sdata);

        kernel_map.va_kernel_xip_pa_offset = kernel_map.virt_addr - kernel_map.xiprom;
    #else
        kernel_map.phys_addr = (uintptr_t)(&_start);
        kernel_map.size = (uintptr_t)(&_end) - kernel_map.phys_addr;
    #endif
        _printf("%s,%d,_start:%lx, _end:%lx\n", __func__, __LINE__,
                (unsigned long)&_start, (unsigned long)&_end);
        _printf("%s,%d,phys_addr:%lx, size:%lx\n", __func__, __LINE__,
                kernel_map.phys_addr, kernel_map.size);

    #if defined(CONFIG_64BIT) && !defined(CONFIG_XIP_KERNEL)
        set_satp_mode();    // probe satp to decide how many page-table levels to use
    #endif


        _printf("%s,%d,PAGE_OFFSET:%lx, kernel_map.page_offset:%lx\n",
                __func__, __LINE__, PAGE_OFFSET, kernel_map.page_offset);

        kernel_map.va_pa_offset = PAGE_OFFSET - kernel_map.phys_addr;
        kernel_map.va_kernel_pa_offset = kernel_map.virt_addr - kernel_map.phys_addr;

        riscv_pfn_base = PFN_DOWN(kernel_map.phys_addr);
        _printf("%s,%d,va_pa_offset:%lx, va_kernel_pa_offset:%lx, pfn_base:%lx\n",
                __func__, __LINE__, kernel_map.va_pa_offset,
                kernel_map.va_kernel_pa_offset, riscv_pfn_base);

        /*
         * The default maximal physical memory size is KERN_VIRT_SIZE for 32-bit
         * kernel, whereas for 64-bit kernel, the end of the virtual address
         * space is occupied by the modules/BPF/kernel mappings which reduces
         * the available size of the linear mapping.
         */
        memory_limit = KERN_VIRT_SIZE - (IS_ENABLED(CONFIG_64BIT) ? SZ_4G : 0);
        _printf("%s,%d,memory_limit:%lx\n", __func__, __LINE__, memory_limit);

        /* Sanity check alignment and size */
        BUG_ON((PAGE_OFFSET % PGDIR_SIZE) != 0);
        BUG_ON((kernel_map.phys_addr % PMD_SIZE) != 0);

    #ifdef CONFIG_64BIT
        /*
         * The last 4K bytes of the addressable memory can not be mapped because
         * of IS_ERR_VALUE macro.
         */
        BUG_ON((kernel_map.virt_addr + kernel_map.size) > ADDRESS_SPACE_END - SZ_4K);
    #endif

        pt_ops_set_early();

        /* Setup early PGD for fixmap */
        _printf("%s,%d FIXADDR_START:%lx, fixmap_pgd_next:%lx, PGDIR_SIZE:%lx\n",
                __func__, __LINE__, FIXADDR_START, fixmap_pgd_next, PGDIR_SIZE);

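        // Once the MMU is on, addresses are no longer used as-is: the MMU must translate
        // them. Accessing the DTB or I/O would then fail, because no page-table entries
        // exist for them. Memory management is not initialized yet either, so page tables
        // cannot be allocated dynamically. The kernel therefore introduces fixmap: a virtual
        // address range is reserved in advance and its PGD/PUD/PMD tables are created up
        // front, while the PTEs are filled in later by whichever user needs them (e.g. the
        // DTB). Physical memory can then be reached through the fixmap virtual addresses.
        // Currently fixmap is mainly used to access the device tree (DTB).
        // FIXADDR_START..FIXADDR_TOP is a fixed virtual range used to map FDT/EARLYCON/IO.
        // Fill in the fixmap PGD entry: it points to fixmap_pgd_next, the next-level table,
        // which is fixmap_p4d[PTRS_PER_P4D] with 5-level paging or fixmap_pud[PTRS_PER_PUD]
        // with 4-level paging (this chapter's experiment uses 4-level paging).
        create_pgd_mapping(early_pg_dir, FIXADDR_START,
                           fixmap_pgd_next, PGDIR_SIZE, PAGE_TABLE);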

    #ifndef __PAGETABLE_PMD_FOLDED
        _printf("%s,%d,pgtable_l5_enabled:%d, pgtable_l4_enabled:%d\n",
                __func__, __LINE__, pgtable_l5_enabled, pgtable_l4_enabled);
        /* Setup fixmap P4D and PUD */
        if (pgtable_l5_enabled)
            create_p4d_mapping(fixmap_p4d, FIXADDR_START,
                               (uintptr_t)fixmap_pud, P4D_SIZE, PAGE_TABLE);
        /* Setup fixmap PUD and PMD */
        if (pgtable_l4_enabled)
            create_pud_mapping(fixmap_pud, FIXADDR_START,
                               (uintptr_t)fixmap_pmd, PUD_SIZE, PAGE_TABLE);
        create_pmd_mapping(fixmap_pmd, FIXADDR_START,
                           (uintptr_t)fixmap_pte, PMD_SIZE, PAGE_TABLE);
        /* Setup trampoline PGD and PMD */
        create_pgd_mapping(trampoline_pg_dir, kernel_map.virt_addr,
                           trampoline_pgd_next, PGDIR_SIZE, PAGE_TABLE);
        if (pgtable_l5_enabled)
            create_p4d_mapping(trampoline_p4d, kernel_map.virt_addr,
                               (uintptr_t)trampoline_pud, P4D_SIZE, PAGE_TABLE);
        if (pgtable_l4_enabled)
            create_pud_mapping(trampoline_pud, kernel_map.virt_addr,
                               (uintptr_t)trampoline_pmd, PUD_SIZE, PAGE_TABLE);
    #ifdef CONFIG_XIP_KERNEL
        create_pmd_mapping(trampoline_pmd, kernel_map.virt_addr,
                           kernel_map.xiprom, PMD_SIZE, PAGE_KERNEL_EXEC);
    #else
        create_pmd_mapping(trampoline_pmd, kernel_map.virt_addr,
                           kernel_map.phys_addr, PMD_SIZE, PAGE_KERNEL_EXEC);
    #endif
    #else
        /* Setup trampoline PGD */
        create_pgd_mapping(trampoline_pg_dir, kernel_map.virt_addr,
                           kernel_map.phys_addr, PGDIR_SIZE, PAGE_KERNEL_EXEC);
    #endif

        /*
         * Setup early PGD covering entire kernel which will allow
         * us to reach paging_init(). We map all memory banks later
         * in setup_vm_final() below.
         */
        create_kernel_page_table(early_pg_dir, true);

        /* Setup early mapping for FDT early scan */
        create_fdt_early_page_table(early_pg_dir, dtb_pa);

        /*
         * Boot-time fixmap only can handle PMD_SIZE mapping. Thus, boot-ioremap
         * range can not span multiple pmds.
         */
        BUG_ON((__fix_to_virt(FIX_BTMAP_BEGIN) >> PMD_SHIFT)
               != (__fix_to_virt(FIX_BTMAP_END) >> PMD_SHIFT));

    #ifndef __PAGETABLE_PMD_FOLDED
        /*
         * Early ioremap fixmap is already created as it lies within first 2MB
         * of fixmap region. We always map PMD_SIZE. Thus, both FIX_BTMAP_END
         * FIX_BTMAP_BEGIN should lie in the same pmd. Verify that and warn
         * the user if not.
         */
        fix_bmap_spmd = fixmap_pmd[pmd_index(__fix_to_virt(FIX_BTMAP_BEGIN))];
        fix_bmap_epmd = fixmap_pmd[pmd_index(__fix_to_virt(FIX_BTMAP_END))];
        if (pmd_val(fix_bmap_spmd) != pmd_val(fix_bmap_epmd)) {
            WARN_ON(1);
            pr_warn("fixmap btmap start [%08lx] != end [%08lx]\n",
                    pmd_val(fix_bmap_spmd), pmd_val(fix_bmap_epmd));
            pr_warn("fix_to_virt(FIX_BTMAP_BEGIN): %08lx\n",
                    fix_to_virt(FIX_BTMAP_BEGIN));
            pr_warn("fix_to_virt(FIX_BTMAP_END): %08lx\n",
                    fix_to_virt(FIX_BTMAP_END));
            pr_warn("FIX_BTMAP_END: %d\n", FIX_BTMAP_END);
            pr_warn("FIX_BTMAP_BEGIN: %d\n", FIX_BTMAP_BEGIN);
        }
    #endif
        _printf("%s,%d start pt_ops_set_fixmap\n", __func__, __LINE__);
        pt_ops_set_fixmap();
        _printf("%s,%d end pt_ops_set_fixmap\n", __func__, __LINE__);
    }

Porting the 6.1 kernel to RISC-V: setup_vm_final -> create_pgd_mapping -> memset

The memset on that address triggers an exception with scause 7 (Store/AMO access fault). Why?

Could someone help take a look?